November 30, 2025 • Lockhead

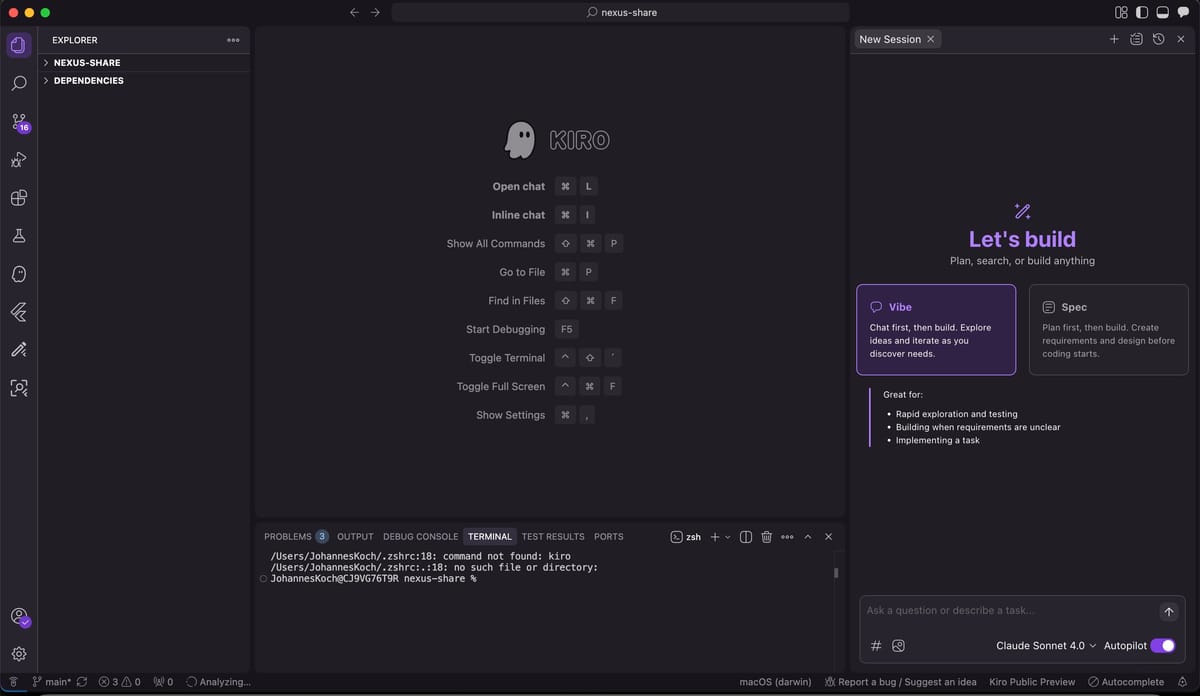

pre:Invent 2025 - Why Kiro is so important for AWS
